What is the Offline Categorization Database?

Our offline categorization database is a comprehensive, downloadable repository containing pre-categorized data for over 30 million domains. This represents approximately 99.5% of the active, trafficked internet, providing unprecedented coverage for applications that demand instant lookups without API latency.

Unlike API-based solutions that require network calls and incur round-trip latency, our offline database allows you to perform categorization lookups directly from your own infrastructure. This eliminates network overhead, reduces costs for high-volume operations, and ensures consistent performance regardless of internet connectivity or API availability.

The database is meticulously maintained and updated regularly to reflect the evolving internet landscape. Each domain entry includes comprehensive categorization data across multiple taxonomies, confidence scores, enrichment metadata, and additional intelligence gathered from our continuous web crawling and analysis operations.

- Pre-Categorized Domains: 30M+

- Active Internet Coverage: 99.5%+

- Lookup Latency: <1ms

- Database Updates: Monthly

Why Choose an Offline Database Solution?

While real-time API categorization offers flexibility for dynamic content and newly discovered URLs, many enterprise use cases benefit significantly from offline database deployments. Understanding when to leverage offline databases versus API calls is crucial for optimizing your architecture.

Ultra-Low Latency Requirements: Applications that cannot tolerate even minimal API latency benefit enormously from offline databases. Real-time bidding (RTB) platforms making sub-100ms decisions, high-frequency trading systems evaluating domain reputations, and network security appliances filtering traffic at line speed all require instant lookups that only local databases can provide. Our offline database enables sub-millisecond lookups when properly indexed, orders of magnitude faster than any network-based API.

High-Volume Processing: When categorizing millions or billions of URLs daily, API costs and rate limits become significant constraints. An offline database eliminates per-request costs and externally imposed rate limits. Lookup volume is bounded only by the capacity of your own hardware, making the economics compelling for large-scale operations. Organizations processing user browsing history, analyzing historical web crawl data, or filtering high-traffic network streams find offline databases dramatically more cost-effective than API alternatives.

Air-Gapped and Restricted Environments: Many enterprise and government environments operate networks isolated from the public internet for security reasons. Offline databases enable categorization capabilities in these air-gapped environments where API access is impossible. Similarly, environments with restrictive network policies, limited bandwidth, or unreliable connectivity benefit from self-contained database solutions that don't depend on external services.

Data Privacy and Compliance: Organizations with strict data privacy requirements may be unable or unwilling to send URLs to external APIs for categorization, as this could expose sensitive information about user behavior, internal systems, or proprietary research. Offline databases enable categorization while keeping all data within organizational boundaries, satisfying compliance requirements for GDPR, HIPAA, financial regulations, and internal security policies.

Consistent Performance and Availability: API-dependent systems face risks from network issues, API service disruptions, or DDoS attacks against external services. Offline databases eliminate these external dependencies, providing consistent performance and availability controlled entirely by your own infrastructure. For mission-critical systems where downtime is unacceptable, this independence is invaluable.

Hybrid Architecture Optimization: The most sophisticated implementations combine offline databases with API fallback. The database answers lookups for the domains it already covers (roughly 99.5% of the active, trafficked internet), while API calls handle the small share of newly registered domains or long-tail URLs not yet included. This hybrid approach optimizes cost, performance, and coverage.

Database Coverage and Content Quality

Our 30 million domain database is carefully curated to maximize practical utility and coverage of actual internet traffic. Rather than including millions of parked domains, abandoned sites, or spam URLs, we focus on active, trafficked domains that users actually visit and encounter.

The database includes comprehensive coverage across domain categories:

- Major Web Properties: All significant websites including the top 10 million domains by traffic, ensuring coverage of sites users actually visit

- E-commerce Platforms: Extensive coverage of online retailers, marketplaces, and commercial sites across all major categories

- News and Media: Global news sources, regional publications, entertainment sites, and media platforms

- Social Networks and Communities: Social media platforms, forums, community sites, and user-generated content platforms

- Business and Professional: Corporate websites, B2B platforms, professional services, and industry-specific resources

- Educational and Reference: Universities, educational resources, reference sites, and knowledge platforms

- Technology and Development: Software companies, development resources, technology platforms, and technical communities

- Geographic Coverage: Balanced representation across regions including North America, Europe, Asia, Latin America, and other global markets

Each domain entry undergoes our comprehensive categorization process, leveraging the same advanced machine learning models that power our real-time API. This ensures consistency between our database and API products, allowing seamless hybrid implementations where databases and APIs complement each other.

Multi-Taxonomy Classification Coverage

Every domain in our offline database includes categorization across all major industry taxonomies, providing versatility for diverse use cases without requiring multiple data sources:

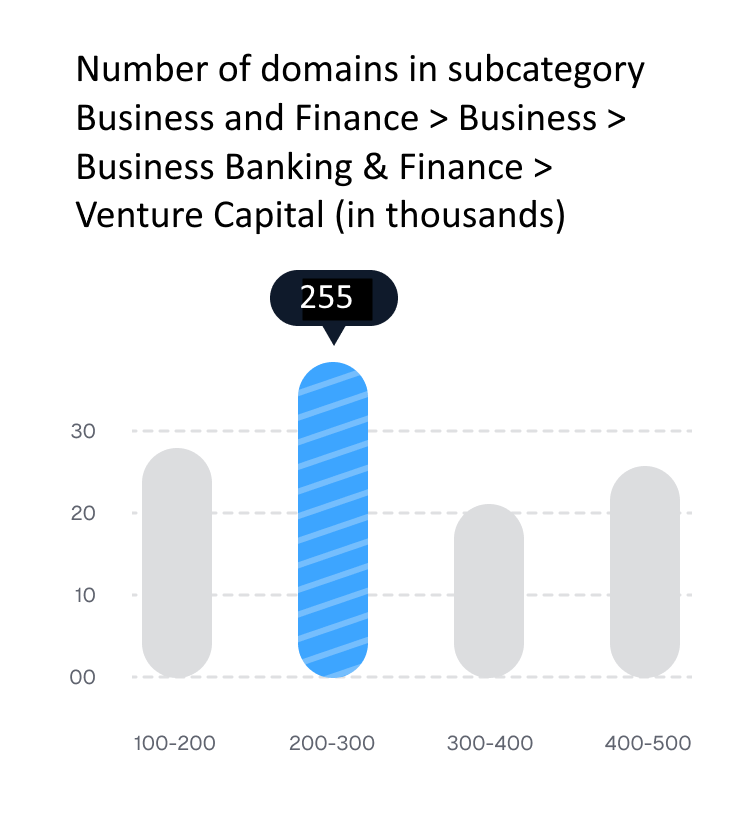

IAB Content Taxonomy: Full categorization using both IAB Taxonomy version 2.0 (698 categories) and version 3.0 (703 categories). These industry-standard taxonomies are essential for digital advertising, ad tech platforms, and brand safety applications. Our database includes complete hierarchical paths, allowing filtering at any tier level from broad topics down to specific subcategories.
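Tier-level filtering over hierarchical paths might look like the sketch below. The `>`-delimited path string, the helper names, and the sample category names are assumptions for illustration; the actual path encoding is defined by the schema documentation for your chosen format.

```python
def path_tiers(path: str, sep: str = ">") -> list[str]:
    """Split a hierarchical category path into its tiers (assumed '>' separator)."""
    return [tier.strip() for tier in path.split(sep) if tier.strip()]


def matches_prefix(path: str, prefix: str, sep: str = ">") -> bool:
    """Return True if `path` sits at or below `prefix` in the hierarchy."""
    tiers = path_tiers(path, sep)
    wanted = path_tiers(prefix, sep)
    return tiers[:len(wanted)] == wanted


# Hypothetical records: domain -> hierarchical category path.
records = {
    "example-news.test": "News and Politics > Politics > Elections",
    "example-shop.test": "Shopping > Coupons and Discounts",
}

# Filter at tier 2: everything under "News and Politics > Politics".
politics = [
    domain
    for domain, path in records.items()
    if matches_prefix(path, "News and Politics > Politics")
]
```

Comparing whole tiers rather than raw string prefixes avoids false matches between categories whose names merely start with the same characters.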

IPTC NewsCodes: For news and media applications, every news-related domain includes detailed IPTC classification across 1,124 categories. This specialized taxonomy enables sophisticated news categorization, content recommendation, and editorial automation systems to precisely classify and route content.

Google Shopping Taxonomy: E-commerce domains are categorized using the 5,474-category Google Shopping taxonomy, enabling product categorization, shopping feed optimization, and e-commerce analytics applications to understand product categories and merchant specializations accurately.

Shopify Taxonomy: The comprehensive 10,560-category Shopify taxonomy provides extremely granular e-commerce classification, particularly valuable for merchants, marketplace platforms, and e-commerce tools requiring detailed product categorization.

Amazon Category Taxonomy: With 39,004 categories, the Amazon taxonomy offers the most detailed e-commerce classification available. This extensive taxonomy enables sophisticated product matching, competitive analysis, and marketplace optimization applications.

Web Content Filtering Taxonomy: Our proprietary 44-category filtering taxonomy addresses web security, parental controls, and content policy applications, classifying domains by suitability and risk factors rather than just content topics.

Database Formats and Deployment Options

We provide the offline database in multiple formats to accommodate diverse technical environments and use case requirements:

CSV Format: Standard comma-separated values files provide universal compatibility with virtually any data processing tool, programming language, or database system. CSV files are ideal for importing into existing databases, processing with data analysis tools, or integrating into custom applications. We provide both full database exports and incremental update files in CSV format.
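As a minimal sketch of consuming a CSV export, the snippet below loads rows into an in-memory dictionary keyed by domain. The column names (`domain`, `primary_category`, `confidence`) and the sample rows are assumptions, not the published file layout.

```python
import csv
import io

# Stand-in for an export file; the real column set may differ.
SAMPLE_CSV = """domain,primary_category,confidence
example.com,Technology,0.97
example.org,Education,0.91
"""


def load_csv(handle) -> dict[str, dict]:
    """Read CSV rows into a dict keyed by domain for constant-time lookups."""
    table = {}
    for row in csv.DictReader(handle):
        row["confidence"] = float(row["confidence"])
        table[row["domain"]] = row
    return table


# In practice this would be open("export.csv", newline="", encoding="utf-8").
table = load_csv(io.StringIO(SAMPLE_CSV))
```

A plain dictionary is convenient for samples and small subsets; holding the full 30 million rows this way would need substantial RAM, so a database import is usually the better fit at full scale.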

JSON Format: Structured JSON files provide rich data representation ideal for modern applications, APIs, and JavaScript-based systems. JSON format preserves hierarchical category structures, nested enrichment data, and complex metadata more naturally than flat CSV files. We provide both single-file and sharded JSON exports for scalability.

SQLite Database: Pre-built SQLite database files offer immediate queryability without requiring database setup or import processes. SQLite provides excellent read performance, minimal overhead, and simple integration into applications. This format is particularly popular for embedded applications, mobile apps, and systems where deploying full database servers is impractical.
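A point lookup against a SQLite file could be sketched as follows. The table name `domains` and its columns are hypothetical; an in-memory database stands in for the shipped file so the example is self-contained.

```python
import sqlite3

# Stand-in for the shipped file. A real deployment might open it read-only with
# sqlite3.connect("file:/path/to/db.sqlite?mode=ro", uri=True).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE domains ("
    "domain TEXT PRIMARY KEY, primary_category TEXT, confidence REAL)"
)
conn.executemany(
    "INSERT INTO domains VALUES (?, ?, ?)",
    [("example.com", "Technology", 0.97), ("example.org", "Education", 0.91)],
)


def lookup(connection: sqlite3.Connection, domain: str):
    """Return (primary_category, confidence) for a domain, or None if absent."""
    return connection.execute(
        "SELECT primary_category, confidence FROM domains WHERE domain = ?",
        (domain,),
    ).fetchone()


hit = lookup(conn, "example.com")
miss = lookup(conn, "not-in-database.test")
```

The parameterized query keeps untrusted input out of the SQL text, and the primary key gives SQLite an index to use for the lookup.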

PostgreSQL and MySQL Dumps: For enterprise deployments using standard relational databases, we provide optimized database dumps that can be directly loaded into PostgreSQL or MySQL instances. These dumps include properly indexed tables and optimized schemas for fast lookups.

Parquet Format: For big data and analytics applications using Apache Spark, Hadoop, or cloud data warehouses like Snowflake and BigQuery, we provide Parquet format exports. Parquet's columnar storage offers excellent compression and query performance for analytical workloads.

Elasticsearch Indices: Pre-built Elasticsearch indices enable powerful full-text search and complex query capabilities. This format is ideal for applications requiring fuzzy matching, multi-field searches, or integration with existing Elasticsearch infrastructure.

Redis RDB Files: For applications demanding absolute minimum latency, we provide Redis database files offering in-memory lookup performance. Redis is ideal for high-frequency lookup scenarios where sub-millisecond response times are critical.

Database Schema and Data Structure

Our offline database employs a carefully designed schema that balances comprehensiveness with query performance. Understanding the data structure helps developers integrate the database effectively and extract maximum value.

The core domain record includes:

- Domain Identifier: Normalized domain name serving as the primary key

- Categories by Taxonomy: Complete categorization results for each supported taxonomy, including hierarchical paths and confidence scores

- Primary Category: The highest-confidence category assignment for quick filtering

- Last Updated: Timestamp indicating when this domain was last analyzed

- Traffic Rank: Approximate global traffic ranking indicating domain popularity

- Language Detection: Primary language or languages detected on the domain

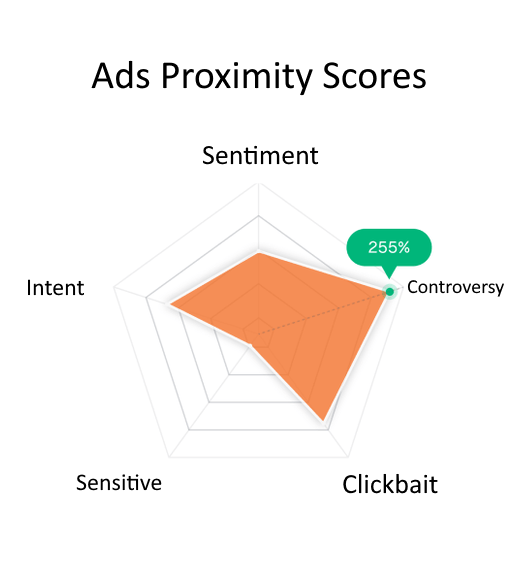

- Safety Flags: Binary indicators for malware, phishing, adult content, and other risk factors

- Enrichment Data: Structured JSON containing buyer personas, entities, topics, technologies, and other metadata

For optimal query performance, we recommend indexing on domain name, primary category, traffic rank, and any frequently filtered fields specific to your use case. Our documentation includes specific indexing recommendations for each database format.
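One way to model the core domain record in application code is sketched below. Every field name, the taxonomy key `iab_v3`, and the sample values are assumptions that mirror the list above; the data dictionary for your format is the authority on actual names and types.

```python
import json
from dataclasses import dataclass


@dataclass
class DomainRecord:
    domain: str            # normalized domain name (primary key)
    primary_category: str  # highest-confidence category
    categories: dict       # taxonomy name -> list of {"path", "confidence"}
    last_updated: str      # timestamp of the last analysis
    traffic_rank: int      # approximate global rank
    languages: list        # detected language codes
    safety_flags: dict     # flag name -> bool
    enrichment: dict       # personas, entities, topics, technologies, ...


def parse_record(raw_json: str) -> DomainRecord:
    """Build a DomainRecord from one JSON object (hypothetical field names)."""
    data = json.loads(raw_json)
    return DomainRecord(
        domain=data["domain"],
        primary_category=data["primary_category"],
        categories=data.get("categories", {}),
        last_updated=data["last_updated"],
        traffic_rank=int(data["traffic_rank"]),
        languages=list(data.get("languages", [])),
        safety_flags=data.get("safety_flags", {}),
        enrichment=data.get("enrichment", {}),
    )


SAMPLE = json.dumps({
    "domain": "example.com",
    "primary_category": "Technology",
    "categories": {"iab_v3": [{"path": "Technology & Computing", "confidence": 0.97}]},
    "last_updated": "2024-01-15T00:00:00Z",
    "traffic_rank": 1200,
    "languages": ["en"],
    "safety_flags": {"malware": False, "phishing": False, "adult": False},
    "enrichment": {"technologies": ["nginx"]},
})
record = parse_record(SAMPLE)
```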

Update Frequency and Incremental Updates

The internet evolves constantly, with domains changing content, purposes, and categories over time. Our database update strategy ensures your local data remains current while minimizing the overhead of updates.

Monthly Full Database Releases: Each month, we publish a complete database snapshot incorporating all new domains, updated categorizations, and removals of dead or inactive domains. Full database releases provide a clean baseline and ensure long-term accuracy.

Weekly Incremental Updates: Between monthly releases, we provide weekly incremental update files containing only changed records. These smaller files enable you to keep your database current without downloading the entire 30 million domain dataset. Incremental updates include new domain additions, category changes for existing domains, and flagged deletions.
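Applying an incremental file to a local copy could be sketched like this. The change format (an `op` of `add`, `update`, or `delete` plus a `record` payload) is an assumption for illustration; the real update files may encode changes differently.

```python
def apply_incremental(table: dict, changes: list[dict]) -> dict:
    """Apply additions, category changes, and deletions to `table` in place."""
    counts = {"upserted": 0, "deleted": 0}
    for change in changes:
        domain = change["domain"]
        if change["op"] == "delete":
            # Only count deletions of domains that were actually present.
            if table.pop(domain, None) is not None:
                counts["deleted"] += 1
        elif change["op"] in ("add", "update"):
            table[domain] = change["record"]
            counts["upserted"] += 1
        else:
            raise ValueError(f"unknown operation: {change['op']!r}")
    return counts


table = {
    "old.test": {"primary_category": "News"},
    "gone.test": {"primary_category": "Shopping"},
}
weekly = [
    {"op": "add", "domain": "new.test", "record": {"primary_category": "Technology"}},
    {"op": "update", "domain": "old.test", "record": {"primary_category": "Business"}},
    {"op": "delete", "domain": "gone.test"},
]
counts = apply_incremental(table, weekly)
```

Because every operation is keyed by domain and overwrites or removes the whole record, reapplying the same file leaves the table in the same state, which simplifies retry logic.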

Daily High-Priority Updates: For rapidly changing domains in news, trending topics, and emerging threats, we offer optional daily update feeds. These feeds are considerably smaller, typically containing hundreds to thousands of records, and focus on domains experiencing significant changes or newly registered domains entering the top traffic tiers.

Our update files include version metadata and checksums for validation, ensuring data integrity during download and import processes. We provide scripts and tools to automate the update process for various database formats.
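Checksum validation before import can be sketched as below. SHA-256 is an assumption here, since the text does not name the algorithm used; the temporary file and its contents stand in for a downloaded update.

```python
import hashlib
import hmac
import os
import tempfile


def file_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large downloads never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(path: str, expected_hex: str) -> bool:
    """Compare the computed digest with the published one."""
    return hmac.compare_digest(file_sha256(path), expected_hex.strip().lower())


# Stand-in for a downloaded update file.
payload = b"domain,primary_category\nexample.com,Technology\n"
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
    update_path = tmp.name

published = hashlib.sha256(payload).hexdigest()  # would come from the version metadata
intact = verify_checksum(update_path, published)
corrupted = verify_checksum(update_path, "0" * 64)
os.remove(update_path)
```

Rejecting a file whose digest does not match, before any import begins, keeps a truncated or corrupted download from reaching the live database.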

Technical Implementation Guidance

Successful deployment of our offline database requires consideration of several technical factors:

Storage Requirements: Database size varies by format. Compressed CSV files require approximately 15-20GB of storage, while uncompressed formats may require 40-60GB. In-memory databases like Redis need sufficient RAM to hold the entire dataset, typically 30-50GB depending on which fields you load. Plan storage with headroom for growth as we continue expanding coverage.
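For capacity planning, the figures above translate into a rough per-record footprint. The arithmetic below assumes 1 GB = 10^9 bytes and a hypothetical 25% growth headroom; both are illustrative choices, not published numbers.

```python
DOMAINS = 30_000_000
GB = 10**9  # decimal gigabytes (assumption)


def bytes_per_record(total_gb: float, domains: int = DOMAINS) -> float:
    """Average bytes per domain record for a dataset of `total_gb` gigabytes."""
    return total_gb * GB / domains


def with_headroom(total_gb: float, headroom: float = 0.25) -> float:
    """Storage to provision, leaving room for coverage growth (assumed 25%)."""
    return total_gb * (1 + headroom)


# Uncompressed formats: 40-60GB, roughly 1.3-2.0 KB per record.
uncompressed = (bytes_per_record(40), bytes_per_record(60))

# In-memory (Redis): 30-50GB, roughly 1.0-1.7 KB per record.
in_memory = (bytes_per_record(30), bytes_per_record(50))

# Provisioning for the upper uncompressed estimate with headroom.
provisioned_gb = with_headroom(60)
```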

Import Performance: Initial database import is the most time-consuming operation. CSV imports into relational databases can take several hours depending on hardware and indexing strategy. SQLite databases can be used immediately without import. We recommend importing during maintenance windows and using provided import scripts optimized for each database type.

Query Optimization: Lookup performance depends on proper indexing. For domain lookups, a simple hash index on the normalized domain name provides near-instant lookups. For category-based queries, composite indexes on category fields improve performance. Our documentation provides database-specific indexing recommendations based on common query patterns.
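The effect of a composite index on a category query can be checked with SQLite's `EXPLAIN QUERY PLAN`, as in this sketch. The table layout and the index name `idx_category_rank` are hypothetical; note that SQLite indexes are B-trees, so in that format the primary key index plays the role of the hash index described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE domains ("
    "domain TEXT PRIMARY KEY, primary_category TEXT, traffic_rank INTEGER)"
)
conn.executemany(
    "INSERT INTO domains VALUES (?, ?, ?)",
    [
        ("a-news.test", "News", 20),
        ("b-news.test", "News", 5),
        ("shop.test", "Shopping", 1),
    ],
)

# Composite index: equality on category, then ordered by traffic rank.
conn.execute(
    "CREATE INDEX idx_category_rank ON domains (primary_category, traffic_rank)"
)

query = (
    "SELECT domain FROM domains "
    "WHERE primary_category = ? ORDER BY traffic_rank LIMIT 10"
)

# The fourth column of each plan row holds the human-readable detail text.
plan = conn.execute("EXPLAIN QUERY PLAN " + query, ("News",)).fetchall()
plan_text = " ".join(row[3] for row in plan)

top_news = [row[0] for row in conn.execute(query, ("News",))]
```

If `plan_text` mentions the index name, the category filter is being served from the index rather than a full table scan.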

Normalization Considerations: Domains in our database use normalized forms (lowercase, without protocol prefixes). Your application should normalize user input to match. We provide normalization functions in our sample code for common languages to ensure consistent matching.
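A normalization helper along these lines might look like the following sketch. Lowercasing and removing the protocol prefix follow the description above; stripping ports, paths, and a trailing dot are additional assumptions, and this is not the vendor's sample code.

```python
from urllib.parse import urlsplit


def normalize_domain(raw: str) -> str:
    """Reduce a URL or hostname to a lowercase bare domain."""
    text = raw.strip().lower()
    if "://" not in text:
        # Prefix "//" so urlsplit treats the input as a network location.
        text = "//" + text
    host = urlsplit(text).hostname or ""
    return host.rstrip(".")


examples = {
    "HTTPS://Example.COM/path?q=1": normalize_domain("HTTPS://Example.COM/path?q=1"),
    "  Sub.Example.com:8080 ": normalize_domain("  Sub.Example.com:8080 "),
    "example.org.": normalize_domain("example.org."),
}
```

Whether a leading `www.` should also be removed depends on how the database keys its entries, so that step is deliberately left out here.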

Handling Missing Domains: No offline database can cover 100% of domains, particularly newly registered ones. Your implementation should include fallback logic for domains not found in the database. Common strategies include API fallback for missing domains, caching API results locally, or applying default categorization rules.
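The default-rule strategy can be sketched as a lookup that falls through to suffix-based rules. The rules, category labels, and the `Uncategorized` fallback below are hypothetical examples, not part of the product.

```python
# Hypothetical default rules: (domain suffix, category to assume).
DEFAULT_RULES = [
    (".edu", "Education"),
    (".gov", "Government"),
]


def categorize_with_defaults(domain, database, rules=DEFAULT_RULES,
                             fallback="Uncategorized"):
    """Return (category, found_in_database) for a normalized domain."""
    category = database.get(domain)
    if category is not None:
        return category, True
    # Not in the offline database: apply the first matching default rule.
    for suffix, default_category in rules:
        if domain.endswith(suffix):
            return default_category, False
    return fallback, False


database = {"example.com": "Technology"}
known = categorize_with_defaults("example.com", database)
by_rule = categorize_with_defaults("new-campus.edu", database)
unknown = categorize_with_defaults("brand-new.test", database)
```

Returning the `found_in_database` flag lets callers log misses or queue them for later API categorization instead of treating a rule-based guess as authoritative.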

Multi-Instance Deployments: Large-scale applications often deploy multiple database instances across servers, data centers, or regions. Our database is read-only after import, making it straightforward to replicate across instances without synchronization complexity. Update processes can refresh each instance independently or use centralized update distribution.

Hybrid Database and API Architecture

The most powerful implementations combine our offline database with our real-time API, leveraging the strengths of each approach:

In a hybrid architecture, the offline database handles the vast majority of lookups for established domains, providing instant results with zero API costs. When a domain is not found in the database or data is older than your freshness threshold, the system falls back to our real-time API for current categorization. Results from API calls can be cached locally or persisted to a supplementary database, reducing future API usage.

This approach delivers the best of both worlds: sub-millisecond performance and zero marginal cost for every lookup the database can answer, with comprehensive coverage through API fallback for the remaining long tail. Many customers report 95-98% of their queries being satisfied by the offline database, with only 2-5% requiring API calls.
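To see what a given hit rate means for API usage, the arithmetic can be worked through as below. The daily volume of 10 million lookups is a hypothetical figure chosen for illustration.

```python
def api_calls_per_day(daily_lookups: int, hit_rate: float) -> int:
    """Lookups that miss the offline database and fall through to the API."""
    return round(daily_lookups * (1 - hit_rate))


DAILY_LOOKUPS = 10_000_000  # hypothetical workload

# Reported range: 95-98% of queries satisfied by the offline database.
best_case = api_calls_per_day(DAILY_LOOKUPS, 0.98)
worst_case = api_calls_per_day(DAILY_LOOKUPS, 0.95)
```

At this volume the fallback traffic ranges from 200,000 to 500,000 API calls per day, which is the figure to size rate limits and API budgets against.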

For optimal hybrid implementations, we recommend:

- Checking the offline database first for every lookup

- Implementing configurable age thresholds for when to refresh via API

- Caching API results locally with appropriate TTL values

- Using asynchronous API calls for non-critical lookups

- Batching multiple API requests when possible

- Monitoring database hit rates to optimize caching strategies

- Scheduling regular database updates during low-traffic periods
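Several of these recommendations (database first, a configurable age threshold, TTL-based caching, and hit-rate monitoring) can be combined in a small wrapper like the sketch below. The class, its parameters, the record layout, and the stub API function are all hypothetical; asynchronous calls and batching are omitted for brevity.

```python
import time


class HybridCategorizer:
    def __init__(self, database, api_lookup, max_age_s, cache_ttl_s, clock=time.time):
        self.database = database      # domain -> {"category", "last_updated"}
        self.api_lookup = api_lookup  # callable: domain -> category or None
        self.max_age_s = max_age_s    # freshness threshold for database records
        self.cache_ttl_s = cache_ttl_s
        self.clock = clock            # injectable for testing
        self.cache = {}               # domain -> (category, expires_at)
        self.db_hits = 0
        self.total = 0

    def lookup(self, domain):
        self.total += 1
        now = self.clock()
        record = self.database.get(domain)
        # 1. Offline database first, if the record is fresh enough.
        if record is not None and now - record["last_updated"] <= self.max_age_s:
            self.db_hits += 1
            return record["category"]
        # 2. Previously cached API result that has not expired.
        cached = self.cache.get(domain)
        if cached is not None and cached[1] > now:
            return cached[0]
        # 3. API fallback; keep serving a stale record if the API has no answer.
        category = self.api_lookup(domain)
        if category is None:
            return record["category"] if record is not None else None
        self.cache[domain] = (category, now + self.cache_ttl_s)
        return category

    def hit_rate(self):
        return self.db_hits / self.total if self.total else 0.0


now = 1_000_000.0
api_calls = []


def fake_api(domain):
    """Stub standing in for a real-time API client."""
    api_calls.append(domain)
    return "Technology"


database = {
    "fresh.test": {"category": "News", "last_updated": now - 10},
    "stale.test": {"category": "Shopping", "last_updated": now - 10_000},
}
hybrid = HybridCategorizer(database, fake_api, max_age_s=3600,
                           cache_ttl_s=86_400, clock=lambda: now)
results = [hybrid.lookup(d)
           for d in ("fresh.test", "stale.test", "new.test", "new.test")]
```

In this run the second `new.test` lookup is served from the cache, so the stub API is called only twice for four lookups.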

Use Case Examples

Ad Tech Platform Implementation: A programmatic advertising platform processes 500 million bid requests daily, each requiring instant domain categorization for contextual targeting. Deploying our offline database across their bidding infrastructure enables sub-millisecond categorization lookups, with 99.2% of requests satisfied from the database. API fallback handles newly registered domains, with results cached for 24 hours. This hybrid approach reduced infrastructure costs by 85% compared to pure API implementation while improving response times.

Enterprise Web Security Gateway: A global enterprise deploys our database on their web security appliances filtering traffic for 100,000 employees across 50 offices. The offline database enables real-time content filtering at line speed without introducing latency. Monthly database updates ensure policy enforcement remains current. The deployment eliminated dependency on external categorization APIs, improving reliability and performance while satisfying data residency requirements.

Market Research Analytics Platform: A competitive intelligence service processes 200 million URLs monthly from web crawls to map competitive landscapes across industries. The offline database categorizes 98% of discovered URLs instantly, enabling real-time analytics dashboards. API integration handles the remaining 2% of niche or newly discovered sites. Database deployment reduced monthly API costs from $50,000 to under $3,000 while improving processing speed by 10x.

Mobile Application Integration: A parental control mobile app embeds our SQLite database directly in the application, enabling offline content filtering without requiring internet connectivity. The 50MB compressed database, a compact build far smaller than the full 15-20GB compressed export, provides categorization for all significant websites, with automatic updates downloaded monthly via the app. This approach eliminates privacy concerns about sending user browsing data to external services while ensuring consistent protection regardless of network availability.

Licensing and Deployment Models

We offer flexible licensing to accommodate different organizational needs and use cases:

Single Instance License: Appropriate for single-server deployments or applications with limited scale. Includes one database instance and monthly updates. Ideal for small businesses, development environments, or specialized applications.

Multi-Instance License: Covers deployments across multiple servers, data centers, or applications within a single organization. Includes unlimited internal deployments with volume discounts based on scale. Most popular for enterprise deployments.

OEM and Redistribution Licenses: For vendors building products or services that incorporate our data for end customers. Flexible terms based on your business model and redistribution scope. Popular for security vendors, ad tech platforms, and data providers.

Custom Enterprise Agreements: Large enterprises with unique requirements can work with our team to structure custom agreements addressing specific technical, legal, or commercial needs. We accommodate special requirements for data formats, update frequencies, geographic restrictions, and compliance frameworks.

Support and Documentation

Database customers receive comprehensive support resources including detailed implementation guides for each database format, sample code in Python, Java, JavaScript, PHP, and other languages, schema documentation and data dictionaries, performance tuning guides, and best practices documentation based on successful customer implementations.

Enterprise customers receive dedicated technical support including implementation assistance, architecture consulting, and priority support channels. Our team has extensive experience helping customers successfully deploy and optimize offline database implementations across diverse use cases and technical environments.

Data Quality and Accuracy Guarantees

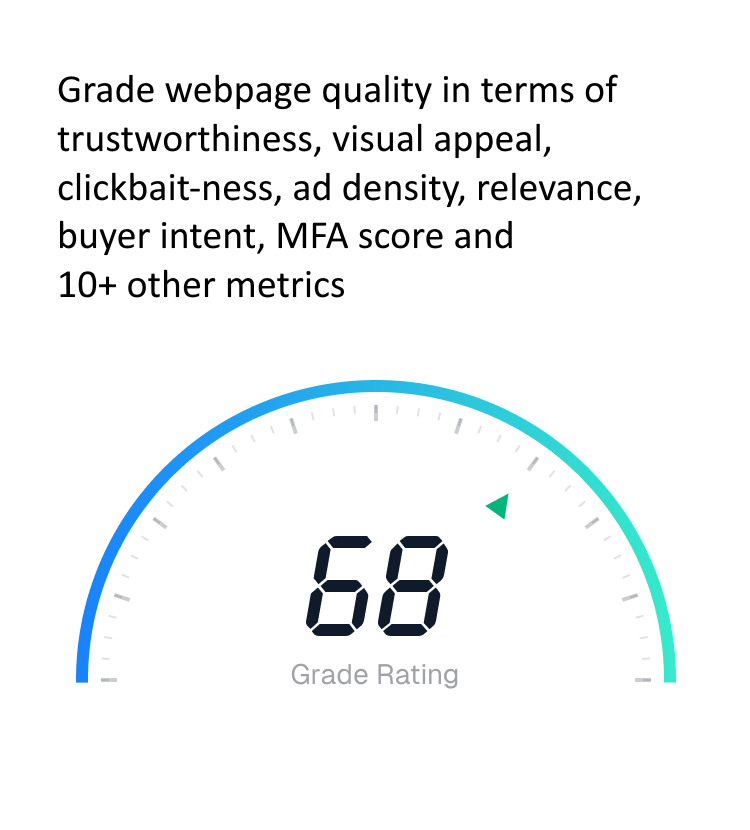

Our offline database maintains the same industry-leading accuracy standards as our real-time API. Every domain undergoes comprehensive analysis using our advanced machine learning models, natural language processing, and computer vision systems. We continuously validate categorization accuracy through automated testing and manual review processes.

Quality assurance processes include:

- Regular sampling and validation against human-labeled datasets

- Automated consistency checking across taxonomies

- Monitoring for categorization changes that may indicate errors

- Customer feedback integration and rapid correction processes

- Continuous model improvement and retraining with updated data

We maintain accuracy rates consistently exceeding 99% for primary category assignments across all major taxonomies, matching the quality standards of our real-time API service.

Getting Started with the Offline Database

Evaluating whether our offline database meets your needs is straightforward. We offer sample datasets containing categorization data for 100,000 representative domains across all taxonomies, allowing you to test integration, query performance, and data quality before commitment.

Our sales engineering team can provide technical consultation to help you determine the optimal architecture for your use case, whether that's pure offline database, pure API, or a hybrid approach. We can also assist with proof-of-concept implementations to validate performance and cost benefits in your specific environment.

The evaluation process typically includes:

- Reviewing your technical requirements and use case

- Providing appropriate sample data in your preferred format

- Technical consultation on implementation approach

- Access to documentation and code examples

- Optionally, a pilot deployment with a subset of your production traffic

Ready to Deploy the Offline Database?

Contact our team to discuss your requirements and get started with a sample dataset.
